前言

作为新博客的第一篇,就用卡渲作为开篇叭!毕竟是个二次元乐。本篇同步发表于http://chenglixue.top/index.php/unity/73/

之前使用UE的后处理做过简单的卡渲,但因其灵活性很差,很多操作都需涉及到更改管线,且奈何本人在校用的笔记本,一次build就得好久,因此放弃对卡渲的深入。如今对URP基本的使用掌握的差不多,是时候深入研究卡渲了

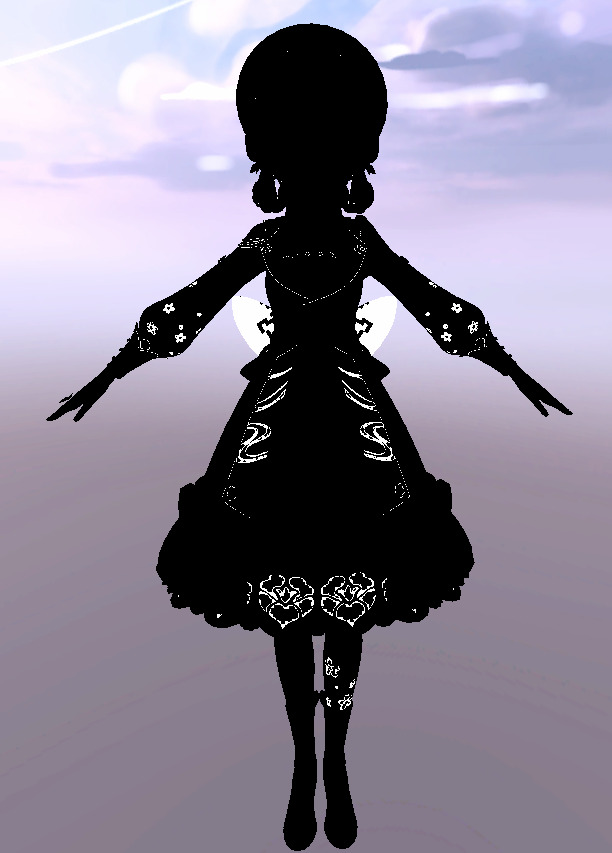

本篇是卡渲篇的首篇,介绍角色的渲染方式,主要参考原神的方式。

Development environment

- URP on Unity 2021.3.32f1

- Win 10

- JetBrains Rider 2023.2

材料准备

虽然模之屋提供了一些角色的模型和纹理,但很明显这其中缺少Light map和ramp Texture,怎么办呢?笔者这里通过b站up主白嫖的(不是)

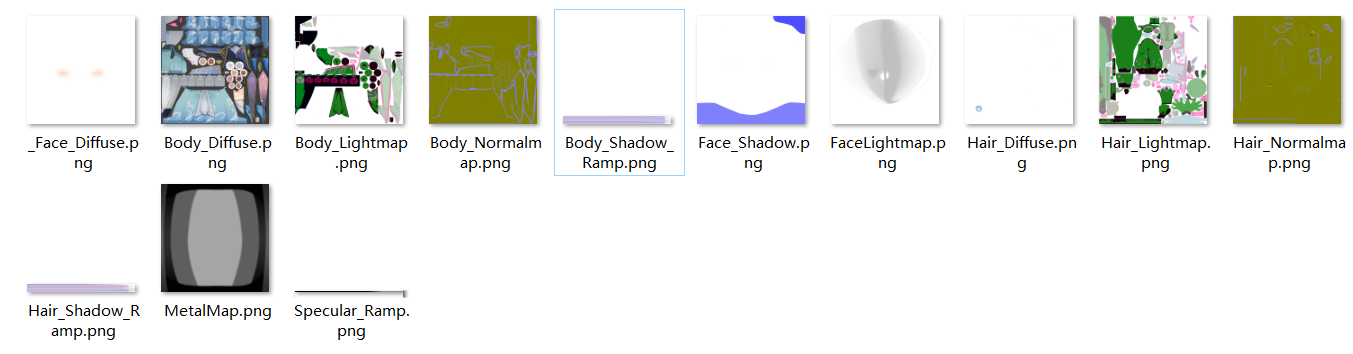

本次使用的神里绫华花时来信的模型和纹理(神里大小姐她真的太优雅了!),使用的纹理如下

部分Tex介绍

Light map

- R channel: specular type layer; the specular type is chosen by threshold

- G channel: shadow AO mask

- B channel: specular intensity mask

- A channel: ramp layer; the ramp row is chosen by threshold

Diffuse

This section covers the diffuse term.

Simple Lambert

As a warm-up, a basic half Lambert is enough.
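To see numerically how half Lambert differs from Lambert, here is a small CPU-side C++ port of both formulas (HLSL cannot run outside Unity, so C++ stands in for it). `Vec3`, `dot3`, `lambert` and `halfLambert` are illustrative names, not URP functions. Both vectors are assumed to be normalized, and the light vector points toward the light, as URP's `mainLight.direction` does.

```cpp
#include <algorithm>
#include <cassert>

// Minimal vector type for this sketch (hypothetical, not part of URP).
struct Vec3 { float x, y, z; };

inline float dot3(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Lambert: max(N·L, 0). Everything facing away from the light collapses to 0.
inline float lambert(const Vec3& n, const Vec3& l) { return std::max(0.0f, dot3(n, l)); }

// Half Lambert: N·L * 0.5 + 0.5 maps [-1, 1] to [0, 1],
// so the side facing away from the light keeps a smooth gradient.
inline float halfLambert(const Vec3& n, const Vec3& l) { return dot3(n, l) * 0.5f + 0.5f; }

// Usage: a surface lit at 90 degrees is 0 under Lambert but 0.5 under half Lambert.
// float a = lambert({0, 1, 0}, {1, 0, 0});     // 0
// float b = halfLambert({0, 1, 0}, {1, 0, 0}); // 0.5
```

The remap is applied to the raw dot product. Clamping N·L with `saturate` first would flatten the whole back side to 0.5.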

Implementation

```shaderlab
Shader "Custom/Toon" // shader name is hypothetical; the original listing omits it
{
    Properties
    {
        [Header(Diffuse Setting)]
        _DiffuseMap("Diffuse Texture", 2D) = "white" {}
        _DiffuseTint("Diffuse Color Tint", Color) = (1, 1, 1, 1)
    }

    SubShader
    {
        Tags
        {
            "RenderPipeline" = "UniversalPipeline"
            "RenderType" = "Opaque"
            "Queue" = "Geometry"
        }

        HLSLINCLUDE
        #include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Core.hlsl"
        #include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Lighting.hlsl"
        #include "Assets/Shader/MyUtil/MyMath.hlsl"

        CBUFFER_START(UnityPerMaterial)
        float4 _DiffuseMap_ST;
        half4 _DiffuseTint;
        CBUFFER_END

        TEXTURE2D(_DiffuseMap); SAMPLER(sampler_DiffuseMap);

        struct VSInput
        {
            float4 positionL : POSITION;
            float3 normalL   : NORMAL;
            float4 tangentL  : TANGENT;
            float4 color     : COLOR0;
            float2 uv        : TEXCOORD0;
        };

        struct PSInput
        {
            float4 color       : COLOR0;
            float4 positionH   : SV_POSITION;
            float4 normalW     : NORMAL;
            float4 tangentW    : TANGENT;
            float4 bitTangentW : TEXCOORD0;
            float4 shadowUV    : TEXCOORD1;
            float4 uv          : TEXCOORD2;
            float3 viewDirW    : TEXCOORD3;
        };

        PSInput ToonPassVS(VSInput vsInput)
        {
            PSInput vsOutput = (PSInput)0; // zero the interpolators this pass does not write

            vsOutput.positionH = TransformObjectToHClip(vsInput.positionL.xyz);
            float3 positionW = TransformObjectToWorld(vsInput.positionL.xyz);
            vsOutput.normalW.xyz = TransformObjectToWorldNormal(vsInput.normalL);
            vsOutput.viewDirW = GetCameraPositionWS() - positionW;
            vsOutput.uv.xy = TRANSFORM_TEX(vsInput.uv, _DiffuseMap);

            return vsOutput;
        }

        half4 ToonPassPS(PSInput psInput) : SV_TARGET
        {
            half3 outputColor = 0.f;

            half4 diffuseTex = SAMPLE_TEXTURE2D(_DiffuseMap, sampler_DiffuseMap, psInput.uv.xy) * _DiffuseTint;

            float3 normalW = normalize(psInput.normalW.xyz);
            float3 viewDirW = normalize(psInput.viewDirW);

            Light mainLight = GetMainLight();
            float3 mainLightDirW = normalize(mainLight.direction);
            half3 mainLightColor = mainLight.color;

            // Half Lambert: remap N·L from [-1, 1] to [0, 1] (no saturate before the remap)
            float NoL = dot(normalW, mainLightDirW) * 0.5 + 0.5;
            half3 diffuse = diffuseTex.rgb * NoL * mainLightColor;
            outputColor += diffuse;

            return half4(outputColor, diffuseTex.a);
        }
        ENDHLSL

        // ------------------------------------------------------------- Toon Main Pass -------------------------------------------------------------
        Pass
        {
            Name "Toon Main Pass"
            Tags { "LightMode" = "UniversalForward" }

            HLSLPROGRAM
            #pragma vertex ToonPassVS
            #pragma fragment ToonPassPS
            ENDHLSL
        }
    }
}
```

Result

Ramp

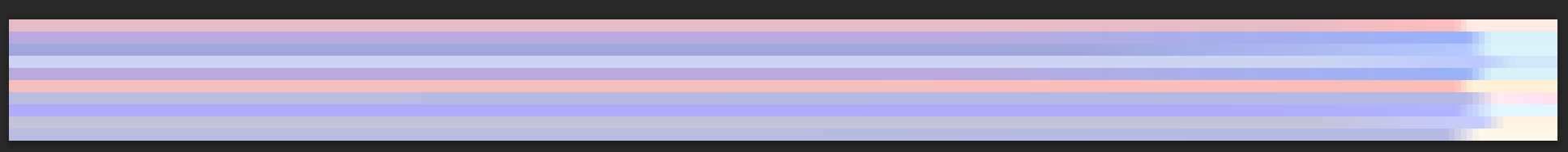

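Anyone who has used ramp textures knows that a ramp controls how lighting is split into bands. In Genshin Impact, for example, the shadowed side of the diffuse is Diffuse * RampTex and the lit side is just Diffuse, although the real implementation is not quite that simple. Genshin's ramp texture is also split into several rows from top to bottom. Take mine as an example: it is 256 × 20 pixels (some ramps have other sizes) and has 20 rows. These are divided into cool-toned and warm-toned shadows of 5 × 2 rows each, for night and day respectively.

The full ramp (in the copy I got, the cool and warm halves were upside down, so I flipped them in Photoshop). There is a sharp boundary at the right edge, and that boundary is what produces the split between light and shadow.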

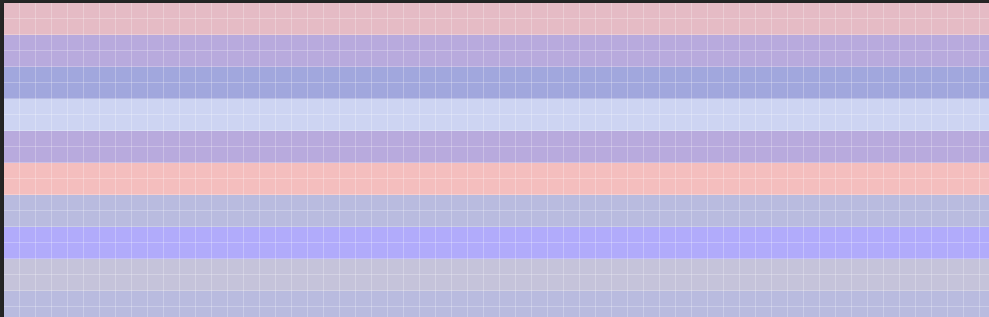

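Zoomed in on the left edge: from top to bottom there are 5 × 2 rows of warm tones, then 5 × 2 rows of cool tones.

Day/night check: the two halves of the ramp correspond to day and night, so we can add a day/night variable driven by the angle of the light direction.

lightmap.a selects the ramp row. This is how my lightmap.a is laid out; other light maps may differ:

- 0.0: hard/emission/specular/silk/hair

- 0.0 – 0.3: soft/common

- 0.3 – 0.5: metal

- 0.5 – 0.7: skin

- 1.0: cloth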
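The mapping above can be sketched as a small C++ function that returns the ramp V coordinate for a given lightmap.a. It uses the same band targets, ±0.05 tolerance and row centres (0.05 to 0.45, plus 0.5 for the other half) as the shader code that follows. `rampRowV` is a hypothetical helper, and the band values are the ones listed for my light map, so they may need adjusting for other assets.

```cpp
#include <cassert>
#include <cmath>
#include <optional>

// Returns the V coordinate of the ramp row for a lightmap.a value, or std::nullopt when no band
// matches (in the shader every step() mask is then 0 and the ramp contributes black).
// upperHalf selects the other half of the ramp texture (a V offset of 0.5).
std::optional<float> rampRowV(float lightMapA, bool upperHalf)
{
    struct Band { float target; float v; };
    // 20-pixel-tall ramp: each band is 2 px, i.e. 0.1 in V, sampled at its centre.
    static const Band bands[] = {
        {1.0f, 0.45f}, // cloth
        {0.7f, 0.35f}, // skin
        {0.5f, 0.25f}, // metal
        {0.3f, 0.15f}, // soft / common
        {0.0f, 0.05f}, // hard / emission / silk / hair
    };
    const float offset = upperHalf ? 0.5f : 0.0f;
    for (const Band& b : bands) {
        // Same test as step(abs(a - target), 0.05) in the shader.
        if (std::fabs(lightMapA - b.target) <= 0.05f) return b.v + offset;
    }
    return std::nullopt;
}
```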

Implementation

```hlsl
// ---------- Properties ----------
[Header(Shadow Setting)][Space(3)]
_LightMap("LightMap", 2D) = "black" {}
_LightShadowColor("Light Shadow Color", Color) = (1, 1, 1, 1)
_DarkShadowColor("Dark Shadow Color", Color) = (1, 1, 1, 1)

[Space(30)]
[Header(Shadow Ramp Setting)][Space(3)]
[Toggle(ENABLE_SHADOW_RAMP)] _EnableShadowRamp("Enable Shadow Ramp", Float) = 0
_RampMap("Ramp Texture", 2D) = "white" {}
_RampShadowRang("Ramp Shadow Range", Range(0, 1)) = 0.5 // controls the light/dark split of the ramp

// ---------- Declarations (the variables go inside CBUFFER_START(UnityPerMaterial)) ----------
half4 _LightShadowColor;
half4 _DarkShadowColor;
float4 _RampMap_ST;
float _RampShadowRang;

TEXTURE2D(_LightMap); SAMPLER(sampler_LightMap);
TEXTURE2D(_RampMap);  SAMPLER(sampler_RampMap);

#pragma shader_feature_local ENABLE_SHADOW_RAMP

// ---------- Fragment shader ----------
half4 ToonPassPS(PSInput psInput) : SV_TARGET
{
    // ...
    half4 lightMapTex = SAMPLE_TEXTURE2D(_LightMap, sampler_LightMap, psInput.uv.xy);

    // Day/night factor from the angle of the light direction.
    // It must be a local: non-static globals are uniforms in HLSL and cannot be written.
    float dayOrNight = dot(float3(0, 1, 0), -mainLightDirW);

    half3 finalRamp = 1.f;
    #if defined(ENABLE_SHADOW_RAMP)
        // Small offset so that sampling the edge does not produce a black line
        float rampU = NoL * (1 / _RampShadowRang - 0.003);
        // Daytime samples the upper half, night the lower half.
        // Write 0.5, not 1/2: 1/2 is integer division in HLSL and evaluates to 0.
        float rampVOffset = dayOrNight > 0.5 && dayOrNight < 1 ? 0.5 : 0.f;

        // Sample the five bands from top to bottom
        half3 shadowRamp1 = SAMPLE_TEXTURE2D(_RampMap, sampler_RampMap, float2(rampU, 0.45 + rampVOffset)).rgb;
        half3 shadowRamp2 = SAMPLE_TEXTURE2D(_RampMap, sampler_RampMap, float2(rampU, 0.35 + rampVOffset)).rgb;
        half3 shadowRamp3 = SAMPLE_TEXTURE2D(_RampMap, sampler_RampMap, float2(rampU, 0.25 + rampVOffset)).rgb;
        half3 shadowRamp4 = SAMPLE_TEXTURE2D(_RampMap, sampler_RampMap, float2(rampU, 0.15 + rampVOffset)).rgb;
        half3 shadowRamp5 = SAMPLE_TEXTURE2D(_RampMap, sampler_RampMap, float2(rampU, 0.05 + rampVOffset)).rgb;

        /*
        lightmap.a:
        0.0       : hard/emission/silk/hair
        0.0 - 0.3 : soft/common
        0.3 - 0.5 : metal
        0.5 - 0.7 : skin
        1.0       : cloth
        */
        half3 fabricRamp = shadowRamp1 * step(abs(lightMapTex.a - 1.f), 0.05);
        half3 skinRamp   = shadowRamp2 * step(abs(lightMapTex.a - 0.7f), 0.05);
        half3 metalRamp  = shadowRamp3 * step(abs(lightMapTex.a - 0.5f), 0.05);
        half3 softRamp   = shadowRamp4 * step(abs(lightMapTex.a - 0.3f), 0.05);
        half3 hardRamp   = shadowRamp5 * step(abs(lightMapTex.a - 0.0f), 0.05);

        finalRamp = fabricRamp + skinRamp + metalRamp + softRamp + hardRamp;
    #endif

    return half4(finalRamp, 1.f);
}
```

Result

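Separating light and shadow

We now know how the shadow is split into bands. Next we need to decide which parts are lit and which are in shadow. Half Lambert (NoL in the code) is the obvious input, but what should it be compared with? Above, _RampShadowRang controls the light/dark distribution of the ramp, and half Lambert also describes the light/dark distribution. Comparing _RampShadowRang with half Lambert therefore tells us which side a pixel is on.

Implementation

```hlsl
// Decide whether the pixel is lit (1) or shadowed (0)
half rampValue = step(_RampShadowRang, NoL);
half3 rampShadowColor = lerp(finalRamp * diffuseTex.rgb, diffuseTex.rgb, rampValue);
```

Result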

A clearly defined boundary between light and shadow.
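Per channel, the split is `lerp(ramp * albedo, albedo, step(_RampShadowRang, NoL))`. Below is a small C++ sketch of that expression. `stepf` and `lerpf` mirror the HLSL intrinsics, and `toonDiffuse` is an illustrative name.

```cpp
#include <cassert>

struct Color { float r, g, b; };

// HLSL step(edge, x): 1 when x >= edge, else 0.
inline float stepf(float edge, float x) { return x >= edge ? 1.0f : 0.0f; }
// HLSL lerp(a, b, t).
inline float lerpf(float a, float b, float t) { return a + (b - a) * t; }

// Lit pixels keep the albedo; shadowed pixels are tinted by the ramp colour.
Color toonDiffuse(Color albedo, Color ramp, float halfLambert, float rampShadowRange)
{
    const float lit = stepf(rampShadowRange, halfLambert);
    return { lerpf(ramp.r * albedo.r, albedo.r, lit),
             lerpf(ramp.g * albedo.g, albedo.g, lit),
             lerpf(ramp.b * albedo.b, albedo.b, lit) };
}
```

Because `step()` returns only 0 or 1, there is no gradient across the terminator. That is what gives the hard two-tone edge.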

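Specular texture analysis

Specular layers

First, let's look at lightmap.r, i.e. the specular thresholds (scaled by 255 so the values match the 8-bit texture values):

- 200 – 260

- 150 – 200

- 100 – 150

- 50 – 100

- 0 – 50

The 50 – 100 and 100 – 150 ranges clearly overlap a lot. I prefer to handle these two together; otherwise the specular may flicker between bright and dark.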

- 200 – 260

- 150 – 200

- 50 – 150

- 0 – 50

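Approach

- To make later art tweaks easy, it is best to implement the specular separately for each material layer. Hair and metal highlights matter most, so a layered implementation is well worth it.

- To control the look of the highlights, the shader relies heavily on _SpecularRangeLayer (the range of each layer's highlight) and _SpecularIntensityLayer (its intensity).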
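The layer ranges amount to a classification on lightmap.r * 255. Here is a C++ sketch using a hypothetical `SpecLayer` enum. The boundaries are copied from the shader branches that follow, so values lying exactly on 50 or 150 fall into no branch, as they do in the shader.

```cpp
#include <cassert>

enum class SpecLayer { None, Layer1, Layer2, HairOrBody, Metal };

// Mirrors the if-branches of the specular code: lightmap.r is scaled to 0-255 first.
SpecLayer classifySpecular(float lightMapR)
{
    const float v = lightMapR * 255.0f;
    if (v > 0.0f && v < 50.0f)     return SpecLayer::Layer1;
    if (v > 50.0f && v < 150.0f)   return SpecLayer::Layer2;     // 50-100 and 100-150 merged
    if (v > 150.0f && v < 200.0f)  return SpecLayer::HairOrBody; // split further by a keyword
    if (v >= 200.0f && v < 260.0f) return SpecLayer::Metal;
    return SpecLayer::None;
}
```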

Implementing the first two layers

For two-tone banding, apply step() to NoH to separate the highlight from the rest of the surface.
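Here is a C++ sketch of the two-tone specular for one layer. It computes Blinn-Phong N·H from the half vector, then `step(1 - range, NoH) * intensity`, optionally multiplied by the lightmap.b mask. The function names are illustrative. `range` and `intensity` stand for one component of `_SpecularRangeLayer` and `_SpecularIntensityLayer`.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

inline float dot3(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline Vec3 normalize3(Vec3 v) { const float len = std::sqrt(dot3(v, v)); return {v.x / len, v.y / len, v.z / len}; }

// Blinn-Phong N·H clamped to [0, 1]; n, l, v are unit vectors (l toward the light, v toward the camera).
inline float nDotH(Vec3 n, Vec3 l, Vec3 v)
{
    const Vec3 h = normalize3({l.x + v.x, l.y + v.y, l.z + v.z});
    return std::clamp(dot3(n, h), 0.0f, 1.0f);
}

// Two-tone specular: full intensity where N·H >= 1 - range, nothing elsewhere.
// A larger range widens the highlight.
inline float stepSpecular(float NoH, float range, float intensity, float mask, bool useMask)
{
    const float s = (NoH >= 1.0f - range ? 1.0f : 0.0f) * intensity;
    return useMask ? s * mask : s;
}
```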

```hlsl
// ---------- Properties ----------
_SpecularRangeLayer("Specular Layer Range", Vector) = (1, 1, 1, 1)         // separates the highlight from the rest
_SpecularIntensityLayer("Specular Layer Intensity", Vector) = (1, 1, 1, 1) // specular intensity of each layer
// Toggle the specular mask per layer
[Toggle(ENABLE_SPECULAR_INTENISTY_MASK1)] _Enable_Specular_Intensity_Mask1("Enable Specular Intensity Mask 1", Int) = 1
[Toggle(ENABLE_SPECULAR_INTENISTY_MASK2)] _Enable_Specular_Intensity_Mask2("Enable Specular Intensity Mask 2", Int) = 1
[Toggle(ENABLE_SPECULAR_INTENISTY_MASK3)] _Enable_Specular_Intensity_Mask3("Enable Specular Intensity Mask 3", Int) = 1
[Toggle(ENABLE_SPECULAR_INTENISTY_MASK4)] _Enable_Specular_Intensity_Mask4("Enable Specular Intensity Mask 4", Int) = 1
[Toggle(ENABLE_SPECULAR_INTENISTY_MASK5)] _Enable_Specular_Intensity_Mask5("Enable Specular Intensity Mask 5", Int) = 1

// ---------- Declarations ----------
float4 _SpecularRangeLayer;
float4 _SpecularIntensityLayer;

#pragma shader_feature_local ENABLE_SPECULAR_INTENISTY_MASK1
#pragma shader_feature_local ENABLE_SPECULAR_INTENISTY_MASK2
#pragma shader_feature_local ENABLE_SPECULAR_INTENISTY_MASK3
#pragma shader_feature_local ENABLE_SPECULAR_INTENISTY_MASK4
#pragma shader_feature_local ENABLE_SPECULAR_INTENISTY_MASK5

// ---------- Fragment shader ----------
// Specular
float3 halfDirW = normalize(mainLightDirW + viewDirW);
float NoH = saturate(dot(normalW, halfDirW));

half3 stepSpecular1 = 0.f;
float specularLayer = lightMapTex.r * 255; // compare against 8-bit values
float stepSpecularMask = lightMapTex.b;

// Layer 1: 0 - 50
if(specularLayer > 0 && specularLayer < 50)
{
    stepSpecular1 = step(1 - _SpecularRangeLayer.x, NoH) * _SpecularIntensityLayer.x;
    #if defined(ENABLE_SPECULAR_INTENISTY_MASK1)
        stepSpecular1 *= stepSpecularMask;
    #endif
    stepSpecular1 *= diffuseTex.rgb;
}

// Layer 2: 50 - 150 (the two overlapping ranges handled together)
if(specularLayer > 50 && specularLayer < 150)
{
    stepSpecular1 = step(1 - _SpecularRangeLayer.y, NoH) * _SpecularIntensityLayer.y;
    #if defined(ENABLE_SPECULAR_INTENISTY_MASK2)
        stepSpecular1 *= stepSpecularMask;
    #endif
    stepSpecular1 *= diffuseTex.rgb;
}
```

Metal layer

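The metal layer needs a metallic sheen texture, i.e. a MatCap. For the metal highlight to change with the camera view, transform the normal into view space and sample the metal texture with the view-space normal.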
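Here is the MatCap lookup sketched on the CPU. The world-space normal is rotated by the rotation part of the view matrix, and its xy is then remapped from [-1, 1] to [0, 1] UVs. The remap is the usual MatCap convention and is an assumption in this sketch. `Mat3`, `mulMat3` and `matcapUV` are hypothetical helpers.

```cpp
#include <cassert>
#include <cmath>
#include <utility>

struct Vec3 { float x, y, z; };
// Row-major 3x3 matrix, e.g. the rotation part of the view matrix (like (float3x3)UNITY_MATRIX_V).
struct Mat3 { float m[3][3]; };

inline Vec3 mulMat3(const Mat3& a, Vec3 v)
{
    return { a.m[0][0] * v.x + a.m[0][1] * v.y + a.m[0][2] * v.z,
             a.m[1][0] * v.x + a.m[1][1] * v.y + a.m[1][2] * v.z,
             a.m[2][0] * v.x + a.m[2][1] * v.y + a.m[2][2] * v.z };
}

// MatCap UV: the view-space normal's xy in [-1, 1], remapped to [0, 1].
// Because the normal is taken in view space, the lookup changes as the camera rotates.
inline std::pair<float, float> matcapUV(const Mat3& viewRotation, Vec3 normalW)
{
    const Vec3 n = mulMat3(viewRotation, normalW);
    const float len = std::sqrt(n.x * n.x + n.y * n.y + n.z * n.z);
    return { n.x / len * 0.5f + 0.5f, n.y / len * 0.5f + 0.5f };
}
```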

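```hlsl
// ---------- Properties ----------
_MetalMap("Metal Map", 2D) = "black" {}
_MetalMapV("Metal Map V", Range(0, 1)) = 1
_MetalMapIntensity("Metal Map Intensity", Range(0, 1)) = 1
_SpecularIntensityMetal("Specular Layer Metal", Float) = 1
_ShinnessMetal("Specular Metal Shinness", Range(5, 30)) = 5

// ---------- Declarations ----------
float _MetalMapV;
float _MetalMapIntensity;
float _ShinnessMetal;
float _SpecularIntensityMetal;
TEXTURE2D(_MetalMap); SAMPLER(sampler_MetalMap);

// ---------- Fragment shader ----------
half3 specular = 0.f;

// Sample the metal MatCap with the view-space normal so the metal highlight always follows the camera.
// The view-space normal's xy lies in [-1, 1]; remap it to [0, 1] UVs.
float3 normalV = normalize(mul((float3x3)UNITY_MATRIX_V, normalW));
half metalTex = SAMPLE_TEXTURE2D(_MetalMap, sampler_MetalMap, normalV.xy * 0.5 + 0.5).r;
metalTex = saturate(metalTex);
metalTex = step(_MetalMapV, metalTex) * _MetalMapIntensity; // controls metal range and intensity

// Metal: 200 - 260
if(specularLayer >= 200 && specularLayer < 260)
{
    specular = pow(NoH, _ShinnessMetal) * _SpecularIntensityMetal;
    #if defined(ENABLE_SPECULAR_INTENISTY_MASK5)
        specular *= stepSpecularMask;
    #endif
    specular += metalTex;
    specular *= diffuseTex.rgb;
}
```

Hair layer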

Handling this layer as simply as the ones above does not work: the hair shares this layer with other materials, so they have to be separated here.

```hlsl
// ---------- Properties ----------
[KeywordEnum(BODY, HAIR)] _ENABLE_SPECULAR("Enable specular body or hair?", Int) = 1

#pragma shader_feature_local _ENABLE_SPECULAR_HAIR _ENABLE_SPECULAR_BODY

// ---------- Fragment shader ----------
half3 stepSpecular2 = 0.f;

// 150 - 200: hair (or body, depending on the keyword)
if(specularLayer > 150 && specularLayer < 200)
{
    #if defined(_ENABLE_SPECULAR_HAIR)
        // Separate out the hair: this keeps the specular only where the mask is 0
        stepSpecular1 = step(1 - _SpecularRangeLayer.w, NoH) * _SpecularIntensityLayer.w;
        stepSpecular1 = lerp(stepSpecular1, 0, stepSpecularMask);
        stepSpecular1 *= diffuseTex.rgb;

        // Hair specular
        stepSpecular2 = step(1 - _SpecularRangeLayer.w, NoH) * _SpecularIntensityLayer.w;
        #if defined(ENABLE_SPECULAR_INTENISTY_MASK4)
            stepSpecular2 *= stepSpecularMask;
        #endif
        stepSpecular2 *= diffuseTex.rgb;
    #else
        // Body specular
        stepSpecular1 = step(1 - _SpecularRangeLayer.w, NoH) * _SpecularIntensityLayer.w;
        #if defined(ENABLE_SPECULAR_INTENISTY_MASK3)
            stepSpecular1 *= stepSpecularMask;
        #endif
        stepSpecular1 *= diffuseTex.rgb;
    #endif
}
```

Hair specular in the game: the lit side has highlights, part of the shadowed side also has them, the rest of the shadowed side has none, and the highlight changes with the view angle.

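How do we implement this? Again with step(), but this time with two variables: one controls the range of view angles and the other controls the highlight range.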
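Here is a C++ sketch of the two-mask idea. One step() on N·H limits where the highlight can appear, and a second step() on N·V limits the view angles from which it is visible. Both multiply the layer's step specular. `hairSpecular` and its parameters are illustrative and mirror `_HairSpecularRange`, `_ViewSpecularRange` and `_HairSpecularIntensity`.

```cpp
#include <cassert>

inline float stepf(float edge, float x) { return x >= edge ? 1.0f : 0.0f; }

// Hair highlight = range mask * view mask * base step specular * intensity.
// hairRange / viewRange in [0, 1]: larger values widen the band of N·H / N·V that passes.
inline float hairSpecular(float NoH, float NoV, float hairRange, float viewRange,
                          float stepSpecular2, float intensity)
{
    const float specularRange = stepf(1.0f - hairRange, NoH); // where the highlight may appear
    const float viewMask = stepf(1.0f - viewRange, NoV);      // from which view angles it is visible
    return specularRange * viewMask * stepSpecular2 * intensity;
}
```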

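```hlsl
// ---------- Properties ----------
_HairSpecularRange("Hair Specular Range", Range(0, 1)) = 1 // controls the highlight range
_ViewSpecularRange("View Specular Range", Range(0, 1)) = 1 // controls how the view angle affects the highlight
_HairSpecularIntensity("Hair Specular Intensity", Float) = 10
[HDR]_HairSpecularColor("Hair Specular Color", Color) = (1, 1, 1, 1)

// ---------- Declarations ----------
float _HairSpecularRange;
float _ViewSpecularRange;
float _HairSpecularIntensity;
half4 _HairSpecularColor;

// ---------- Fragment shader ----------
float NoV = saturate(dot(normalW, viewDirW));
float specularRange = step(1 - _HairSpecularRange, NoH); // highlight range
float viewRange = step(1 - _ViewSpecularRange, NoV);     // view-angle range
half3 hairSpecular = specularRange * viewRange * stepSpecular2 * _HairSpecularIntensity;
hairSpecular *= diffuseTex.rgb * _HairSpecularColor.rgb;
```

On the left, the darkest part is the base color from the model's diffuse texture. There is a little specular at the boundary between light and shadow, and a clear highlight on the lit side on the right.

Blending the specular layers with lerp

Finally, to keep the transitions between specular layers from looking harsh, blend them with lerp.

Implementation

```hlsl
specular = lerp(stepSpecular1, specular, lightMapTex.r); // the metal layer's lightmap.r is close to 1
specular = lerp(0, specular, lightMapTex.r);             // attenuate
specular = lerp(0, specular, rampValue);                 // no specular in shadow
```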

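Constant-width screen-space rim light

To understand constant-width screen-space rim lighting, you need to know how to obtain the depth texture and what a depth offset is.

- I covered obtaining the depth texture in another post: https://www.cnblogs.com/chenglixue/p/17811333.html

- What is a depth offset? Put simply, the UV used to sample the depth texture is shifted along the normal direction. That shift is the depth offset, and it is the key to constant-width screen-space rim lighting.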
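The depth comparison behind the rim light can be sketched in C++. Unity's `LinearEyeDepth(d, _ZBufferParams)` computes `1 / (_ZBufferParams.z * d + _ZBufferParams.w)`. The sketch builds the parameters following Unity's documented layout for a reversed-Z depth buffer (1 at the near plane, 0 at the far plane). `makeReversedZParams` and `rimMask` are hypothetical helpers; in the shader, the depth at the offset position comes from `_CameraDepthTexture`.

```cpp
#include <cassert>
#include <cmath>

struct ZBufferParams { float x, y, z, w; };

// _ZBufferParams layout for a reversed-Z depth buffer (device depth 1 at near, 0 at far).
inline ZBufferParams makeReversedZParams(float nearPlane, float farPlane)
{
    const float x = -1.0f + farPlane / nearPlane;
    return { x, 1.0f, x / farPlane, 1.0f / farPlane };
}

// Same formula as LinearEyeDepth(): device depth -> view-space distance.
inline float linearEyeDepth(float deviceDepth, ZBufferParams p)
{
    return 1.0f / (p.z * deviceDepth + p.w);
}

// Rim mask: if the depth found one "rim width" away along the normal is farther than
// this pixel by at least the threshold, the pixel sits on a silhouette edge.
inline float rimMask(float depthHere, float depthAtOffset, ZBufferParams p, float threshold)
{
    const float diff = linearEyeDepth(depthAtOffset, p) - linearEyeDepth(depthHere, p);
    return diff >= threshold ? 1.0f : 0.0f;
}
```

The comparison is done on linear depths because raw device depth is highly non-linear. A fixed threshold on raw values would behave very differently near and far from the camera.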

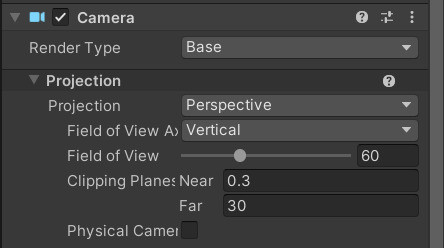

Note: suppose your depth-texture sampling in URP is correct but the depth texture is completely black. Most likely the camera's far plane is too far away, or the model is too far from the camera (see this article for the reason).

Implementation

```hlsl
// ---------- Properties ----------
[Toggle(ENABLE_RIMLIGHT)] _Enable_RimLight("Enable RimLight", Int) = 1 // enable the rim light
_RimLightUVOffsetMul("Rim Light Width", Range(0, 0.1)) = 0.1           // rim light width
_RimLightThreshold("Rim Light Threshold", Range(0, 1)) = 0.5           // depth-difference threshold
[HDR]_RimLightColor("Rim Light Color", Color) = (1, 1, 1, 1)

// ---------- Declarations ----------
float _RimLightUVOffsetMul;
float _RimLightThreshold;
half4 _RimLightColor;
TEXTURE2D_X_FLOAT(_CameraDepthTexture); SAMPLER(sampler_CameraDepthTexture); // depth texture

#pragma shader_feature_local ENABLE_RIMLIGHT

// ---------- Vertex shader (PSInput needs extra fields: float3 positionW, float3 positionV, float4 positionNDC) ----------
const VertexPositionInputs vertexPositionInput = GetVertexPositionInputs(vsInput.positionL.xyz);
const VertexNormalInputs vertexNormalInput = GetVertexNormalInputs(vsInput.normalL, vsInput.tangentL);
vsOutput.positionH = vertexPositionInput.positionCS;
vsOutput.positionW = vertexPositionInput.positionWS;
vsOutput.positionV = vertexPositionInput.positionVS;
vsOutput.positionNDC = vertexPositionInput.positionNDC;
vsOutput.normalW.xyz = vertexNormalInput.normalWS;

// ---------- Fragment shader ----------
half3 rimLight = 0.f;
#if defined(ENABLE_RIMLIGHT)
    float3 positionV = psInput.positionV;
    float4 positionNDC = psInput.positionNDC;
    float3 normalV = normalize(mul((float3x3)UNITY_MATRIX_V, normalW));

    // Offset in view space. z must not change: clip-space w equals -z in view space,
    // and it has to stay the same for the point to land at the correct viewport position.
    float3 offsetPosV = float3(positionV.xy + normalV.xy * _RimLightUVOffsetMul, positionV.z);

    // Transform the offset point to clip space, then to [0, 1] screen UVs
    float4 offsetPosH = TransformWViewToHClip(offsetPosV);
    float4 offsetScreenPos = ComputeScreenPos(offsetPosH);
    float2 offsetUV = offsetScreenPos.xy / offsetScreenPos.w;

    float depth = positionNDC.z / positionNDC.w;
    float offsetDepth = SAMPLE_TEXTURE2D_X(_CameraDepthTexture, sampler_CameraDepthTexture, offsetUV).r;
    float linearDepth = LinearEyeDepth(depth, _ZBufferParams);             // linearise the depth
    float linearOffsetDepth = LinearEyeDepth(offsetDepth, _ZBufferParams); // same for the offset sample
    float depthDiff = linearOffsetDepth - linearDepth;

    float rimLightMask = step(_RimLightThreshold * 0.1, depthDiff);
    rimLight = _RimLightColor.rgb * _RimLightColor.a * rimLightMask;
#endif
outputColor += rimLight;
```

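Outline

Genshin Impact uses the light map's alpha channel / vertex colors to draw colored outlines. The official model used here does not provide that data, so that is not an option. Instead I used Colin's method, and the result is pretty decent.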
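The core of the clip-space outline can be checked on the CPU. The sketch extrudes a clip-space position along the normal's clip-space xy and divides the x offset by the aspect ratio. For the fixed-width variant it also multiplies by w, so the offset survives the perspective divide unchanged. `extrudeOutline`, `ndcX` and the vector types are hypothetical; the 0.01 scale matches the shader that follows.

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { float x, y; };
struct Vec4 { float x, y, z, w; };

// Offsets a clip-space position along the (clip-space) normal direction.
// aspect = screen width / height; fixedWidth = keep the same on-screen width at any distance.
Vec4 extrudeOutline(Vec4 posCS, Vec2 normalCS, float width, float aspect, bool fixedWidth)
{
    const float len = std::sqrt(normalCS.x * normalCS.x + normalCS.y * normalCS.y);
    Vec2 offset = { normalCS.x / len * width * 0.01f, normalCS.y / len * width * 0.01f };
    offset.x /= aspect; // make the width equal in x and y on a non-square screen
    if (fixedWidth) {
        // The GPU later divides by w; pre-multiplying cancels it, so the NDC offset is constant.
        offset.x *= posCS.w;
        offset.y *= posCS.w;
    }
    return { posCS.x + offset.x, posCS.y + offset.y, posCS.z, posCS.w };
}

// NDC x after the perspective divide.
inline float ndcX(Vec4 p) { return p.x / p.w; }
```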

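Implementation

```hlsl
// ---------- Properties ----------
[Toggle(OUTLINE_FIXED)] _Outline_Fixed("Outline Fixed", Int) = 1
_OutlineWidth("Outline Width", Range(0, 1)) = 1
[HDR]_OutlineColor("Outline Color", Color) = (1, 1, 1, 1)

// ---------- Declarations ----------
float _OutlineWidth;
half4 _OutlineColor;

// ---------- Outline pass ----------
Pass
{
    Tags
    {
        // With multiple passes in URP, the extra pass needs LightMode = SRPDefaultUnlit
        "LightMode" = "SRPDefaultUnlit"
    }
    Cull Front // draw back faces only, so the outline does not cover the model

    HLSLPROGRAM
    #pragma shader_feature_local OUTLINE_FIXED
    #pragma vertex OutlineVS
    #pragma fragment OutlinePS

    PSInput OutlineVS(VSInput vsInput)
    {
        PSInput vsOutput = (PSInput)0;
        vsOutput.positionH = TransformObjectToHClip(vsInput.positionL.xyz);

        float4 scaledSSParam = GetScaledScreenParams();
        float scaleSS = abs(scaledSSParam.x / scaledSSParam.y); // screen aspect ratio

        vsOutput.normalW.xyz = TransformObjectToWorldNormal(vsInput.normalL);
        // The normal is a direction, so transform it without translation
        float3 normalH = TransformWorldToHClipDir(vsOutput.normalW.xyz);
        float2 extendWidth = normalize(normalH.xy) * _OutlineWidth * 0.01;
        extendWidth.x /= scaleSS; // the aspect ratio may not be 1; divide it out so x and y widths match

        #if defined(OUTLINE_FIXED)
            // Constant on-screen width: multiplying by w cancels the perspective divide,
            // so the offset is fixed in NDC space
            vsOutput.positionH.xy += extendWidth * vsOutput.positionH.w;
        #else
            // The width shrinks as the camera moves away from the object
            vsOutput.positionH.xy += extendWidth;
        #endif

        return vsOutput;
    }

    half4 OutlinePS(PSInput psInput) : SV_TARGET
    {
        return _OutlineColor;
    }
    ENDHLSL
}
```

Result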

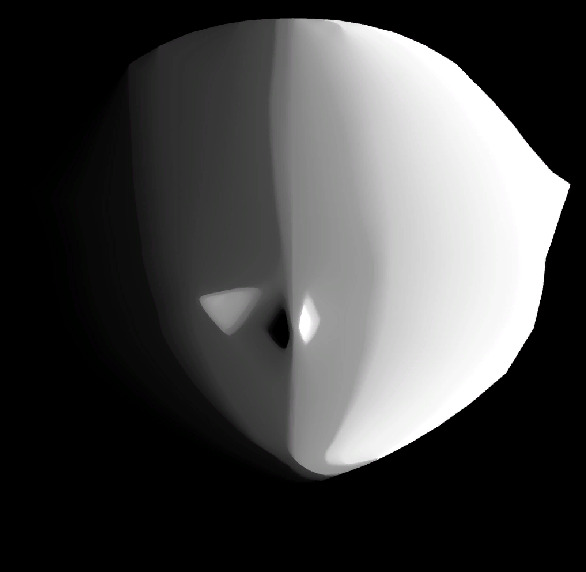

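SDF face shadow

Genshin Impact uses an SDF texture to produce face shadows cheaply.

Approach: in the SDF above, the white values are lighting thresholds; once the light reaches a point's threshold, that point is in shadow. The thresholds also differ between the left and right halves. So we sample both the SDF and its horizontally flipped copy, then use whichever matches the side the light is coming from.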
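The decision can be sketched in C++ on the XZ plane. `faceShadow` is an illustrative helper that follows the shader code after this paragraph. The angle term goes from 0 (light in front) to 1 (light behind), the SDF value for the lit side acts as the threshold, and `step(threshold, angleDiff)` marks the shadow. As with URP's `mainLight.direction`, the light direction is assumed to point toward the light.

```cpp
#include <algorithm>
#include <cassert>

struct Vec2 { float x, z; };

inline float dot2(Vec2 a, Vec2 b) { return a.x * b.x + a.z * b.z; }

// Returns 1 when the face pixel is in shadow, 0 otherwise.
// front / left: the head's forward and left directions in world XZ (normalized).
// sdfLeft / sdfRight: the SDF sampled at uv and at the horizontally flipped uv.
float faceShadow(Vec2 front, Vec2 left, Vec2 lightDir, float sdfLeft, float sdfRight)
{
    // 0 when the light is straight ahead, 0.5 from the side, 1 from behind.
    const float angleDiff = 1.0f - std::clamp(dot2(front, lightDir) * 0.5f + 0.5f, 0.0f, 1.0f);
    // Pick the SDF that matches the side the light is on.
    const float threshold = dot2(lightDir, left) > 0.0f ? sdfLeft : sdfRight;
    return angleDiff >= threshold ? 1.0f : 0.0f; // step(threshold, angleDiff)
}
```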

Implementation

```hlsl
// ---------- Properties ----------
[Toggle(ENABLE_FACE_SHAODW)] _EnableFaceShadow("Enable Face Shadow", Int) = 1
_FaceMap("Face Map", 2D) = "white" {}
[HDR]_FaceShadowColor("Face Shadow Color", Color) = (1, 1, 1, 1)
_LerpFaceShadow("Lerp Face Shadow", Range(0, 1)) = 1

// ---------- Declarations ----------
half4 _FaceShadowColor;
float _LerpFaceShadow;
TEXTURE2D(_FaceMap); SAMPLER(sampler_FaceMap);

#pragma shader_feature_local ENABLE_FACE_SHAODW

// ---------- Fragment shader ----------
////////////////////////////////
// Face shadow
////////////////////////////////
#if defined(ENABLE_FACE_SHAODW)
    half3 faceColor = diffuseTex.rgb;
    float isShadow = 0;

    // For light moving from the model's front to its back-left
    half4 l_FaceTex = SAMPLE_TEXTURE2D(_FaceMap, sampler_FaceMap, psInput.uv.xy);
    // For light moving from the model's front to its back-right (horizontally flipped SDF)
    half4 r_FaceTex = SAMPLE_TEXTURE2D(_FaceMap, sampler_FaceMap, float2(1 - psInput.uv.x, psInput.uv.y));

    float2 leftDir = normalize(TransformObjectToWorldDir(float3(-1, 0, 0)).xz); // model's left
    float2 frontDir = normalize(TransformObjectToWorldDir(float3(0, 0, 1)).xz); // model's front
    float angleDiff = 1 - saturate(dot(frontDir, mainLightDirW.xz) * 0.5 + 0.5); // angle between front and light
    float ilm = dot(mainLightDirW.xz, leftDir) > 0 ? l_FaceTex.r : r_FaceTex.r;  // pick the SDF for the lit side

    // Compare the angle with the SDF threshold
    isShadow = step(ilm, angleDiff);
    float bias = smoothstep(0, _LerpFaceShadow, abs(angleDiff - ilm)); // smooth the shadow edge, otherwise it aliases
    if(angleDiff > 0.99 || isShadow == 1)
        faceColor = lerp(diffuseTex.rgb, diffuseTex.rgb * _FaceShadowColor.rgb, bias);

    outputColor += faceColor;
#endif
```

Result

Emission

Genshin Impact uses diffuse.a as the emission mask.

Implementation

```hlsl
// ---------- Properties ----------
[Toggle(ENABLE_EMISSION)] _Enable_Emission("Enable Emission", Int) = 1
_EmissionIntensity("Emission Intensity", Range(0, 5)) = 1

// ---------- Declarations ----------
float _EmissionIntensity;
#pragma shader_feature_local ENABLE_EMISSION

// ---------- Fragment shader ----------
////////////////////////////////
// Emission
////////////////////////////////
half3 emission = 0.f;
#if defined(ENABLE_EMISSION)
    emission = diffuseTex.rgb * diffuseTex.a * _EmissionIntensity;
#endif
```

Bloom

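For the bloom blur I use Dual Blur (dual filtering, often called dual Kawase), because it gives very good quality at a low cost.

Bloom pipeline: extract the bright parts -> blur them -> add the blurred result back onto the original image.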
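The first two steps can be sketched on a small grayscale image in C++. The bright pass uses the same luminance weights as `ExtractLuminance()`. The two Dual Blur kernels use the tap offsets and weights of `DownSamplePS` and `UpSamplePS`, and each kernel is a weighted sum of bilinear samples. For clarity, `applyKernel` keeps the resolution unchanged; the real passes also halve or double it. All names here are illustrative.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct RGB { float r, g, b; };

// Luminance with the same weights as ExtractLuminance().
inline float luminance(RGB c) { return 0.2125f * c.r + 0.7154f * c.g + 0.0721f * c.b; }

// Step 1, bright pass: scale the colour by how far its luminance exceeds the threshold.
inline RGB brightPass(RGB c, float threshold)
{
    const float k = std::clamp(luminance(c) - threshold, 0.0f, 1.0f);
    return { c.r * k, c.g * k, c.b * k };
}

// One kernel tap: offset in texels (before scaling by the blur intensity) and weight.
struct Tap { float dx, dy, w; };

// Step 2, the Dual Blur kernels: down = centre 1/2 + four diagonals 1/8;
// up = four axis taps 1/12 + four half-offset diagonals 1/6.
const Tap kDownTaps[] = { {0, 0, 0.5f}, {1, 1, 0.125f}, {-1, -1, 0.125f}, {1, -1, 0.125f}, {-1, 1, 0.125f} };
const Tap kUpTaps[] = { {0, 1, 1.0f / 12}, {0, -1, 1.0f / 12}, {1, 0, 1.0f / 12}, {-1, 0, 1.0f / 12},
                        {0.5f, 0.5f, 1.0f / 6}, {-0.5f, -0.5f, 1.0f / 6}, {0.5f, -0.5f, 1.0f / 6}, {-0.5f, 0.5f, 1.0f / 6} };

// Bilinear fetch with clamp-to-edge addressing; texel centres sit at integer coordinates.
float sampleBilinear(const std::vector<float>& img, int w, int h, float x, float y)
{
    x = std::clamp(x, 0.0f, float(w - 1));
    y = std::clamp(y, 0.0f, float(h - 1));
    const int x0 = int(x), y0 = int(y);
    const int x1 = std::min(x0 + 1, w - 1), y1 = std::min(y0 + 1, h - 1);
    const float fx = x - x0, fy = y - y0;
    const float top = img[y0 * w + x0] * (1 - fx) + img[y0 * w + x1] * fx;
    const float bottom = img[y1 * w + x0] * (1 - fx) + img[y1 * w + x1] * fx;
    return top * (1 - fy) + bottom * fy;
}

// Applies a kernel at every texel (same resolution, for clarity).
template <std::size_t N>
std::vector<float> applyKernel(const std::vector<float>& img, int w, int h, const Tap (&taps)[N], float blur)
{
    std::vector<float> out(img.size(), 0.0f);
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x)
            for (const Tap& t : taps)
                out[y * w + x] += t.w * sampleBilinear(img, w, h, x + t.dx * blur, y + t.dy * blur);
    return out;
}

// Step 3 adds the blurred bright pass back: source + blur * bloomColor * bloomIntensity.
```

Both kernels' weights sum to 1, so blurring moves energy around without brightening or darkening the image overall.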

Since bloom is a post-process, it needs a Renderer Feature.

Shader

```shaderlab
Shader "Custom/PP_Bloom"
{
    Properties
    {
        _MainTex("Main Tex", 2D) = "white" {}
    }

    SubShader
    {
        Tags { "RenderPipeline" = "UniversalPipeline" }
        Cull Off ZWrite Off ZTest Always

        HLSLINCLUDE
        #include "Assets/Shader/PostProcess/Bloom.hlsl"
        ENDHLSL

        Pass // pass 0
        {
            Name "Extract Luminance"

            HLSLPROGRAM
            #pragma vertex ExtractLumVS
            #pragma fragment ExtractLumPS
            ENDHLSL
        }

        Pass // pass 1
        {
            Name "Down Sample"

            HLSLPROGRAM
            #pragma vertex DownSampleVS
            #pragma fragment DownSamplePS
            ENDHLSL
        }

        Pass // pass 2
        {
            Name "Up Sample"

            HLSLPROGRAM
            #pragma vertex UpSampleVS
            #pragma fragment UpSamplePS
            ENDHLSL
        }

        Pass // pass 3
        {
            Name "add blur with source RT"

            HLSLPROGRAM
            #pragma vertex BloomVS
            #pragma fragment BloomPS
            ENDHLSL
        }
    }
}
```

HLSL

```hlsl
#pragma once
#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Core.hlsl"

// -------------------------------------------- variable definition --------------------------------------------
CBUFFER_START(UnityPerMaterial)
float4 _MainTex_TexelSize;
CBUFFER_END

half _BlurIntensity;
float _LuminanceThreshold;
half4 _BloomColor;
float _BloomIntensity;

TEXTURE2D(_MainTex);   // blurred RT
SAMPLER(sampler_MainTex);
TEXTURE2D(_SourceTex); // original RT
SAMPLER(sampler_SourceTex);

struct VSInput
{
    float4 positionL : POSITION;
    float2 uv        : TEXCOORD0;
};

struct PSInput
{
    float4 positionH : SV_POSITION;
    float2 uv        : TEXCOORD0;
    float4 uv01      : TEXCOORD1;
    float4 uv23      : TEXCOORD2;
    float4 uv45      : TEXCOORD3;
    float4 uv67      : TEXCOORD4;
};

// -------------------------------------------- function definition --------------------------------------------
half ExtractLuminance(half3 color)
{
    return 0.2125 * color.r + 0.7154 * color.g + 0.0721 * color.b;
}

////////////////////////////////
// Extract luminance
////////////////////////////////
PSInput ExtractLumVS(VSInput vsInput)
{
    PSInput vsOutput = (PSInput)0;
    vsOutput.positionH = TransformObjectToHClip(vsInput.positionL.xyz);
    vsOutput.uv = vsInput.uv;
    return vsOutput;
}

half4 ExtractLumPS(PSInput psInput) : SV_TARGET
{
    half3 outputColor = 0.f;
    half4 mainTex = SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv);
    half luminanceFactor = saturate(ExtractLuminance(mainTex.rgb) - _LuminanceThreshold);
    outputColor += mainTex.rgb * luminanceFactor;
    return half4(outputColor, 1.f);
}

////////////////////////////////
// Dual Blur
////////////////////////////////
PSInput DownSampleVS(VSInput vsInput)
{
    PSInput vsOutput = (PSInput)0;
    vsOutput.positionH = TransformObjectToHClip(vsInput.positionL.xyz);

    // On D3D-like platforms, _TexelSize.y becomes negative when anti-aliasing is enabled
    #if UNITY_UV_STARTS_AT_TOP
    if(_MainTex_TexelSize.y < 0)
        vsInput.uv.y = 1 - vsInput.uv.y;
    #endif

    float2 offset = _MainTex_TexelSize.xy * _BlurIntensity;
    vsOutput.uv = vsInput.uv;
    vsOutput.uv01.xy = vsInput.uv + float2( 1.f,  1.f) * offset;
    vsOutput.uv01.zw = vsInput.uv + float2(-1.f, -1.f) * offset;
    vsOutput.uv23.xy = vsInput.uv + float2( 1.f, -1.f) * offset;
    vsOutput.uv23.zw = vsInput.uv + float2(-1.f,  1.f) * offset;
    return vsOutput;
}

float4 DownSamplePS(PSInput psInput) : SV_TARGET
{
    // Centre 1/2, four diagonal taps 1/8 each
    float4 outputColor = 0.f;
    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv.xy) * 0.5;
    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv01.xy) * 0.125;
    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv01.zw) * 0.125;
    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv23.xy) * 0.125;
    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv23.zw) * 0.125;
    return outputColor;
}

PSInput UpSampleVS(VSInput vsInput)
{
    PSInput vsOutput = (PSInput)0;
    vsOutput.positionH = TransformObjectToHClip(vsInput.positionL.xyz);

    #if UNITY_UV_STARTS_AT_TOP
    if(_MainTex_TexelSize.y < 0.f)
        vsInput.uv.y = 1 - vsInput.uv.y;
    #endif

    float2 offset = _MainTex_TexelSize.xy * _BlurIntensity;
    vsOutput.uv = vsInput.uv;
    // weight 1/12
    vsOutput.uv01.xy = vsInput.uv + float2( 0,  1) * offset;
    vsOutput.uv01.zw = vsInput.uv + float2( 0, -1) * offset;
    vsOutput.uv23.xy = vsInput.uv + float2( 1,  0) * offset;
    vsOutput.uv23.zw = vsInput.uv + float2(-1,  0) * offset;
    // weight 1/6
    vsOutput.uv45.xy = vsInput.uv + float2( 1,  1) * 0.5 * offset;
    vsOutput.uv45.zw = vsInput.uv + float2(-1, -1) * 0.5 * offset;
    vsOutput.uv67.xy = vsInput.uv + float2( 1, -1) * 0.5 * offset;
    vsOutput.uv67.zw = vsInput.uv + float2(-1,  1) * 0.5 * offset;
    return vsOutput;
}

float4 UpSamplePS(PSInput psInput) : SV_TARGET
{
    float4 outputColor = 0.f;
    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv01.xy) * (1.0 / 12.0);
    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv01.zw) * (1.0 / 12.0);
    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv23.xy) * (1.0 / 12.0);
    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv23.zw) * (1.0 / 12.0);
    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv45.xy) * (1.0 / 6.0);
    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv45.zw) * (1.0 / 6.0);
    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv67.xy) * (1.0 / 6.0);
    outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv67.zw) * (1.0 / 6.0);
    return outputColor;
}

////////////////////////////////
// Add the blurred RT onto the original image
////////////////////////////////
PSInput BloomVS(VSInput vsInput)
{
    PSInput vsOutput = (PSInput)0;
    vsOutput.positionH = TransformObjectToHClip(vsInput.positionL.xyz);
    vsOutput.uv = vsInput.uv;
    return vsOutput;
}

half4 BloomPS(PSInput psInput) : SV_TARGET
{
    half3 outputColor = 0.f;
    half4 sourceTex = SAMPLE_TEXTURE2D(_SourceTex, sampler_SourceTex, psInput.uv);
    half4 blurTex = SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv);
    outputColor += sourceTex.rgb + blurTex.rgb * _BloomColor.rgb * _BloomIntensity;
    return half4(outputColor, 1.f);
}
```

RenderFeature

```csharp
using System;
using UnityEngine;
using UnityEngine.Rendering;
using UnityEngine.Rendering.Universal;

public class BloomRenderFeature : ScriptableRendererFeature
{
    // Settings shown on the Renderer Feature
    [System.Serializable]
    public class PassSetting
    {
        [Tooltip("profiler tag will show up in frame debugger")]
        public readonly string m_ProfilerTag = "Bloom Pass";

        [Tooltip("Where the pass is inserted")]
        public RenderPassEvent m_passEvent = RenderPassEvent.AfterRenderingTransparents;

        [Tooltip("Resolution divisor")]
        [Range(1, 5)]
        public int m_Downsample = 1;

        [Tooltip("Number of blur iterations")]
        [Range(1, 5)]
        public int m_PassLoop = 2;

        [Tooltip("Blur strength")]
        [Range(0, 10)]
        public float m_BlurIntensity = 1;

        [Tooltip("Luminance threshold")]
        [Range(0, 1)]
        public float m_LuminanceThreshold = 0.5f;

        [Tooltip("Bloom color")]
        public Color m_BloomColor = new Color(1.0f, 1.0f, 1.0f, 1.0f);

        [Tooltip("Bloom intensity")]
        [Range(0, 10)]
        public float m_BloomIntensity = 1;
    }

    class BloomRenderPass : ScriptableRenderPass
    {
        // Stores the pass settings
        private BloomRenderFeature.PassSetting m_passSetting;
        private RenderTargetIdentifier m_TargetBuffer, m_TempBuffer;
        private Material m_Material;

        static class ShaderIDs
        {
            // int IDs are faster than strings because the lookup is done once, up front
            internal static readonly int m_BlurIntensityProperty = Shader.PropertyToID("_BlurIntensity");
            internal static readonly int m_LuminanceThresholdProperty = Shader.PropertyToID("_LuminanceThreshold");
            internal static readonly int m_BloomColorProperty = Shader.PropertyToID("_BloomColor");
            internal static readonly int m_BloomIntensityProperty = Shader.PropertyToID("_BloomIntensity");
            internal static readonly int m_TempBufferProperty = Shader.PropertyToID("_BufferRT1");
            internal static readonly int m_SourceBufferProperty = Shader.PropertyToID("_SourceTex");
        }

        // Shader IDs of the downsample and upsample levels
        struct BlurLevelShaderIDs
        {
            internal int downLevelID;
            internal int upLevelID;
        }
        static int maxBlurLevel = 16;
        private BlurLevelShaderIDs[] blurLevel;

        // Sets up the material properties
        public BloomRenderPass(BloomRenderFeature.PassSetting passSetting)
        {
            this.m_passSetting = passSetting;

            renderPassEvent = m_passSetting.m_passEvent;

            if (m_Material == null) m_Material = CoreUtils.CreateEngineMaterial("Custom/PP_Bloom");

            // Set the material properties from the pass settings
            m_Material.SetFloat(ShaderIDs.m_BlurIntensityProperty, m_passSetting.m_BlurIntensity);
            m_Material.SetFloat(ShaderIDs.m_LuminanceThresholdProperty, m_passSetting.m_LuminanceThreshold);
            m_Material.SetColor(ShaderIDs.m_BloomColorProperty, m_passSetting.m_BloomColor);
            m_Material.SetFloat(ShaderIDs.m_BloomIntensityProperty, m_passSetting.m_BloomIntensity);
        }

        // Gets called by the renderer before executing the pass.
        // Can be used to configure render targets and their clearing state.
        // Can be used to create temporary render target textures.
        // If this method is not overridden, the render pass will render to the active camera render target.
        public override void OnCameraSetup(CommandBuffer cmd, ref RenderingData renderingData)
        {
            // Grab the color buffer from the renderer camera color target
            m_TargetBuffer = renderingData.cameraData.renderer.cameraColorTarget;

            blurLevel = new BlurLevelShaderIDs[maxBlurLevel];
            for (int t = 0; t < maxBlurLevel; ++t) // 16 down-level IDs and 16 up-level IDs
            {
                blurLevel[t] = new BlurLevelShaderIDs
                {
                    downLevelID = Shader.PropertyToID("_BlurMipDown" + t),
                    upLevelID = Shader.PropertyToID("_BlurMipUp" + t)
                };
            }
        }

        // The actual execution of the pass. This is where custom rendering occurs
        public override void Execute(ScriptableRenderContext context, ref RenderingData renderingData)
        {
            // Grab a command buffer. We put the actual execution of the pass inside of a profiling scope
            CommandBuffer cmd = CommandBufferPool.Get();
            // camera target descriptor will be used when creating a temporary render texture
            RenderTextureDescriptor descriptor = renderingData.cameraData.cameraTargetDescriptor;
            // The temporary render textures need no depth buffer
            descriptor.depthBufferBits = 0;

            using (new ProfilingScope(cmd, new ProfilingSampler(m_passSetting.m_ProfilerTag)))
            {
                // The camera image is the starting point of the downsample chain
                RenderTargetIdentifier lastDown = m_TargetBuffer;

                cmd.GetTemporaryRT(ShaderIDs.m_SourceBufferProperty, descriptor, FilterMode.Bilinear);
                cmd.CopyTexture(m_TargetBuffer, ShaderIDs.m_SourceBufferProperty); // copy the original RT to _SourceTex

                ////////////////////////////////
                // Extract luminance
                ////////////////////////////////
                cmd.GetTemporaryRT(ShaderIDs.m_TempBufferProperty, descriptor, FilterMode.Bilinear);
                m_TempBuffer = new RenderTargetIdentifier(ShaderIDs.m_TempBufferProperty);
                cmd.Blit(m_TargetBuffer, m_TempBuffer, m_Material, 0);
                cmd.Blit(m_TempBuffer, lastDown);

                ////////////////////////////////
                // Blur
                ////////////////////////////////
                // Lower the resolution
                descriptor.width /= m_passSetting.m_Downsample;
                descriptor.height /= m_passSetting.m_Downsample;

                // Downsample chain
                for (int i = 0; i < m_passSetting.m_PassLoop; ++i)
                {
                    int midDown = blurLevel[i].downLevelID;
                    int midUp = blurLevel[i].upLevelID;
                    cmd.GetTemporaryRT(midDown, descriptor, FilterMode.Bilinear);
                    cmd.GetTemporaryRT(midUp, descriptor, FilterMode.Bilinear);
                    cmd.Blit(lastDown, midDown, m_Material, 1);
                    lastDown = midDown;

                    descriptor.width = Mathf.Max(descriptor.width / 2, 1);
                    descriptor.height = Mathf.Max(descriptor.height / 2, 1);
                }

                // Upsample chain
                int lastUp = blurLevel[m_passSetting.m_PassLoop - 1].downLevelID;
                for (int i = m_passSetting.m_PassLoop - 2; i >= 0; --i)
                {
                    int midUp = blurLevel[i].upLevelID;
                    cmd.Blit(lastUp, midUp, m_Material, 2);
                    lastUp = midUp;
                }

                // Upsample the final level back into the camera target
                cmd.Blit(lastUp, m_TargetBuffer, m_Material, 2);

                ////////////////////////////////
                // Add the blur onto the original image
                ////////////////////////////////
                cmd.Blit(m_TargetBuffer, m_TempBuffer, m_Material, 3);
                cmd.Blit(m_TempBuffer, m_TargetBuffer);
            }

            // Execute the command buffer and release it
            context.ExecuteCommandBuffer(cmd);
            CommandBufferPool.Release(cmd);
        }

        // Called when the camera has finished rendering
        // release/cleanup any allocated resources that were created by this pass
        public override void OnCameraCleanup(CommandBuffer cmd)
        {
            if (cmd == null) throw new ArgumentNullException("cmd");

            // Release every temporary render texture allocated in Execute to avoid a leak
            for (int i = 0; i < m_passSetting.m_PassLoop; ++i)
            {
                cmd.ReleaseTemporaryRT(blurLevel[i].downLevelID);
                cmd.ReleaseTemporaryRT(blurLevel[i].upLevelID);
            }
            cmd.ReleaseTemporaryRT(ShaderIDs.m_TempBufferProperty);
            cmd.ReleaseTemporaryRT(ShaderIDs.m_SourceBufferProperty);
        }
    }

    public PassSetting m_Setting = new PassSetting();
    BloomRenderPass m_DualBlurPass;

    // Initialisation
    public override void Create()
    {
        m_DualBlurPass = new BloomRenderPass(m_Setting);
    }

    // Here you can inject one or multiple render passes in the renderer.
    // This method is called when setting up the renderer once per-camera.
    public override void AddRenderPasses(ScriptableRenderer renderer, ref RenderingData renderingData)
    {
        // can queue up multiple passes after each other
        renderer.EnqueuePass(m_DualBlurPass);
    }
}
```

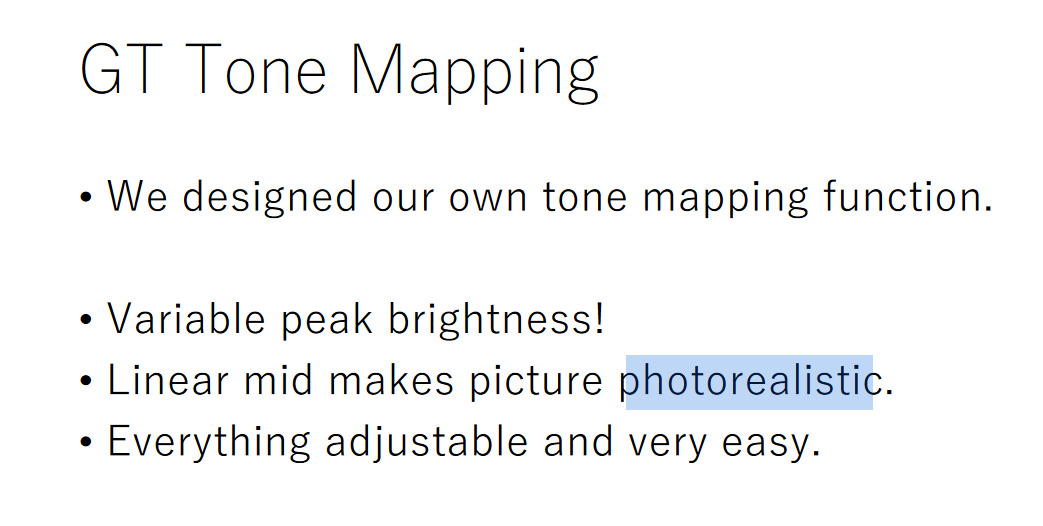

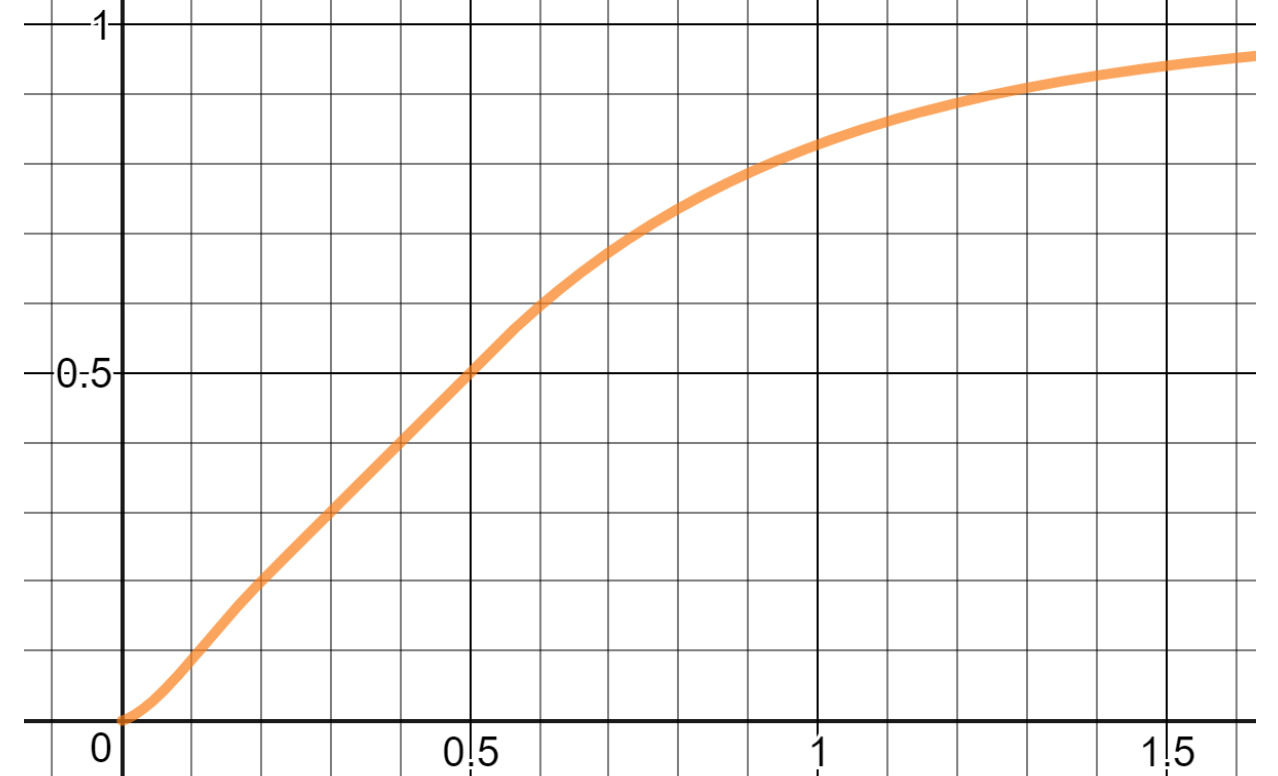

Tonemapping

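Tone mapping uses GT Tonemap (Hajime Uchimura's Gran Turismo tonemapper). It lets you freely set the peak brightness, and its linear mid-section gives a photographic look. All of its parameters are easy to tune.

Why not ACES RRT+ODT? The ACES ODT parameters are fixed, with presets only for 1000/2000/4000 nits, and the RRT bakes in its own look.

Colin has also pointed out that ACES does not suit toon rendering.

Formula: https://www.desmos.com/calculator/gslcdxvipg?lang=zh-CN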

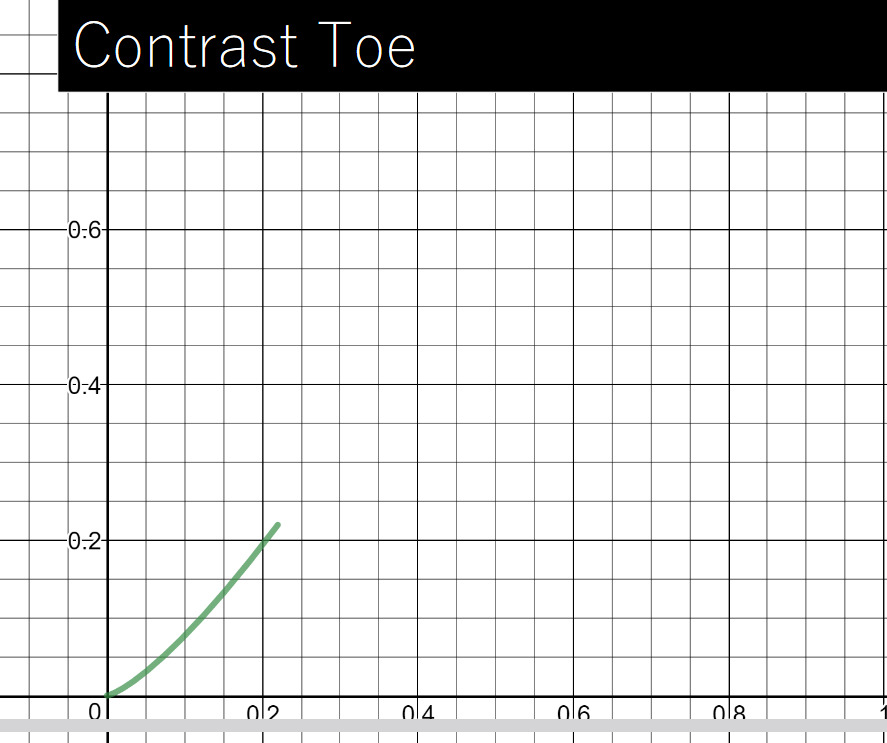
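Below is a scalar C++ port of the curve, handy for plotting or sanity-checking parameter choices before touching the shader. It uses the same toe / linear / shoulder pieces and weights as `GTHelper` in the HLSL further down. The default values in `GTParams` (P = 1, a = 1, m = 0.22, l = 0.4, c = 1.33, b = 0) are assumptions taken from commonly used defaults for Uchimura's curve. They are not the Renderer Feature defaults used in this post.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Parameters of the GT curve (illustrative defaults).
struct GTParams {
    float P = 1.0f;  // peak (maximum) brightness
    float a = 1.0f;  // contrast
    float m = 0.22f; // linear section start
    float l = 0.4f;  // linear section length
    float c = 1.33f; // black tightness (toe exponent)
    float b = 0.0f;  // black pedestal
};

// Standard cubic smoothstep between e0 and e1.
inline float smoothstepRange(float e0, float e1, float x)
{
    const float t = std::clamp((x - e0) / (e1 - e0), 0.0f, 1.0f);
    return t * t * (3.0f - 2.0f * t);
}

float gtTonemap(float x, const GTParams& p)
{
    const float l0 = (p.P - p.m) * p.l / p.a; // linear length after scaling
    const float S0 = p.m + l0;                // shoulder start (input)
    const float S1 = p.m + p.a * l0;          // shoulder start (output)
    const float C2 = p.a * p.P / (p.P - S1);

    const float T = p.m * std::pow(x / p.m, p.c) + p.b;                 // toe
    const float L = p.m + p.a * (x - p.m);                              // linear mid-section
    const float S = p.P - (p.P - S1) * std::exp(-C2 * (x - S0) / p.P);  // shoulder

    const float w0 = 1.0f - smoothstepRange(0.0f, p.m, x); // toe weight
    const float w2 = x <= S0 ? 0.0f : 1.0f;                 // shoulder weight (step at S0)
    const float w1 = 1.0f - w0 - w2;                        // linear weight
    return T * w0 + L * w1 + S * w2;
}
```

With a = 1 the mid-section is the identity, so mid-tones pass through unchanged. Highlights roll off smoothly toward P instead of clipping.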

Three key parameters

Contrast adjustable toe

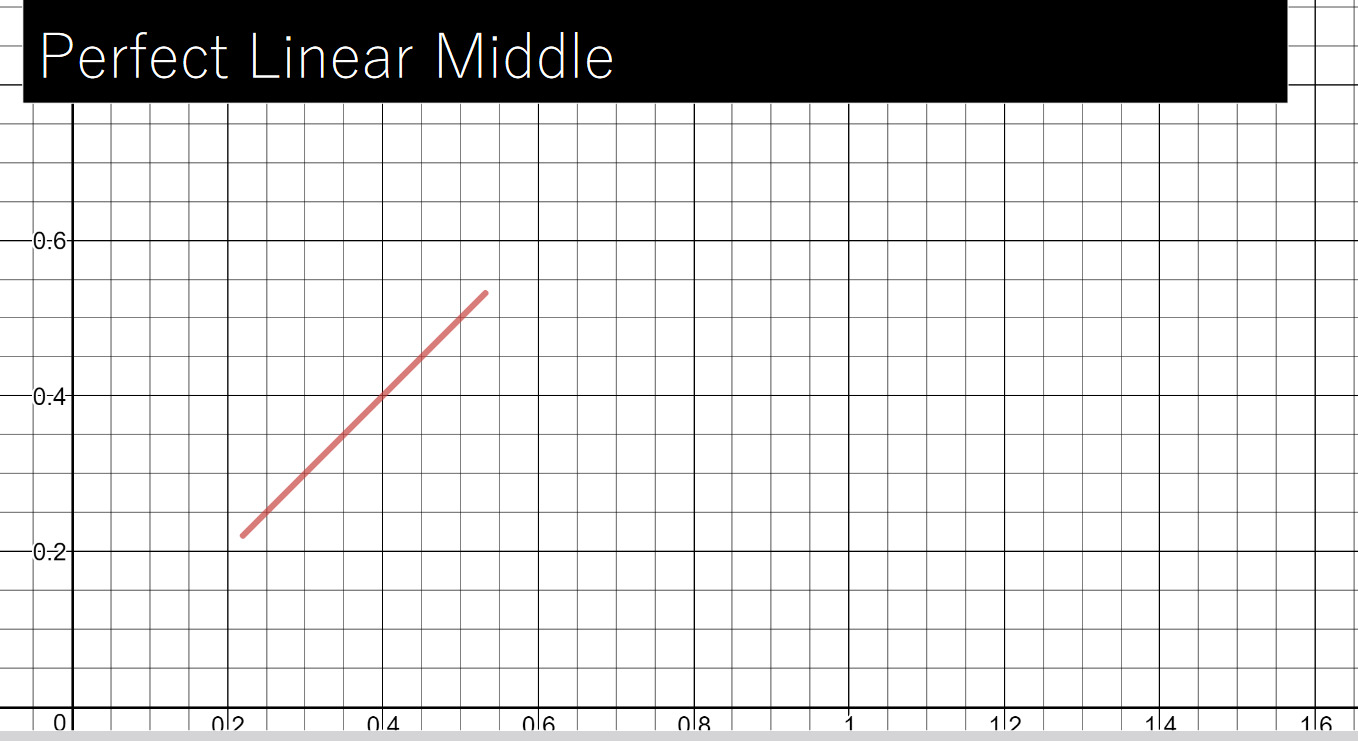

Linear section in middle

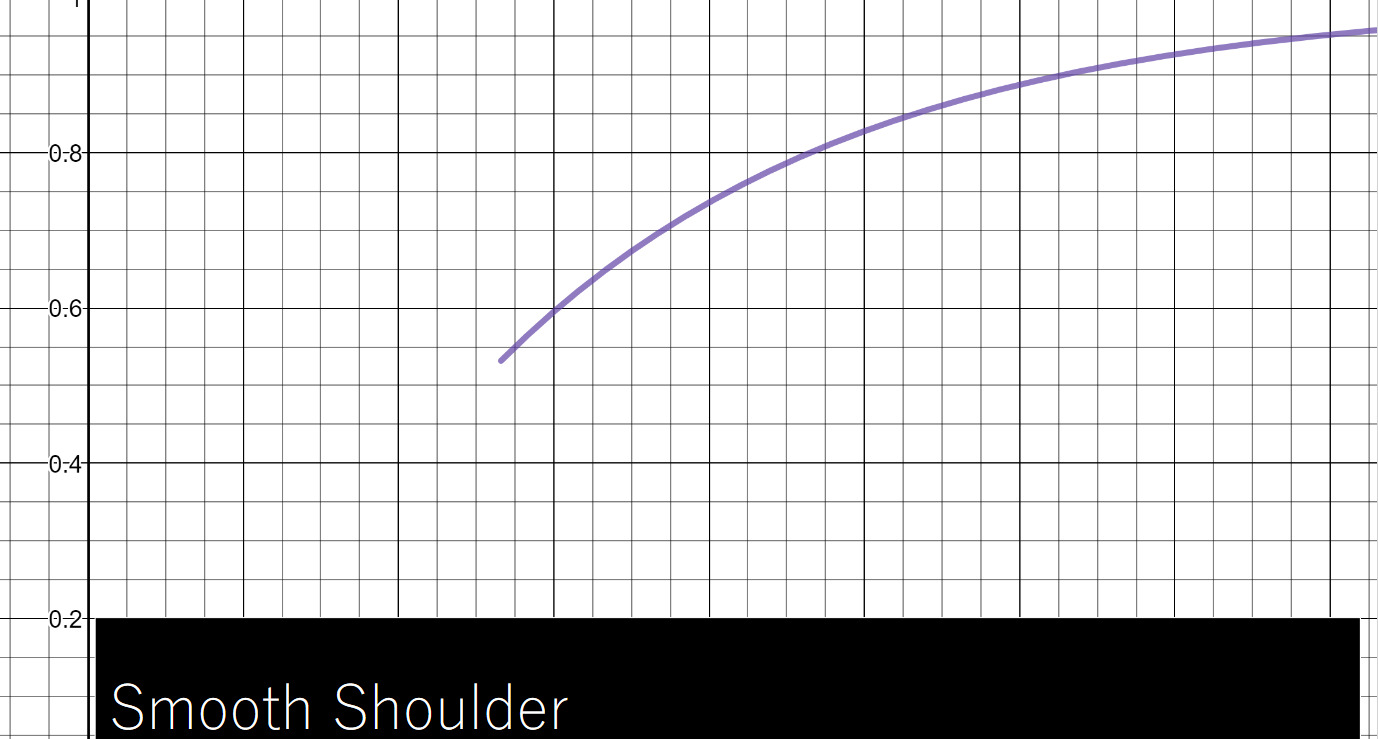

Smooth shoulder

Like bloom, tone mapping is a post-process, so it also consists of a shader and a Renderer Feature.

Shader

```shaderlab
Shader "Custom/PP_Tonemapping"
{
    Properties
    {
        _MainTex("Main Tex", 2D) = "white" {}
    }

    SubShader
    {
        Tags { "RenderPipeline" = "UniversalPipeline" }
        Cull Off ZWrite Off ZTest Always

        HLSLINCLUDE
        #include "Assets/Shader/PostProcess/Tonemapping.hlsl"
        ENDHLSL

        Pass
        {
            Name "Tone mapping"

            HLSLPROGRAM
            #pragma vertex TonemappingVS
            #pragma fragment TonemappingPS
            ENDHLSL
        }
    }
}
```

HLSL

```hlsl
#pragma once
#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Core.hlsl"

// -------------------------------------------- variable definition --------------------------------------------
float _MaxLuminanice;
float _Contrast;
float _LinearSectionStart;
float _LinearSectionLength;
float _BlackTightnessC;
float _BlackTightnessB;

TEXTURE2D(_MainTex);
SAMPLER(sampler_MainTex);

struct VSInput
{
    float4 positionL : POSITION;
    float2 uv        : TEXCOORD0;
};

struct PSInput
{
    float4 positionH : SV_POSITION;
    float2 uv        : TEXCOORD0;
};

// -------------------------------------------- function definition --------------------------------------------
////////////////////////////////
// Tone mapping begin
////////////////////////////////

// smoothstep(e0, e1, x): cubic 3m^2 - 2m^3
float WFunction(float x, float e0, float e1)
{
    if(x <= e0) return 0.f;
    if(x >= e1) return 1.f;

    float m = (x - e0) / (e1 - e0);
    return m * m * (3.f - 2.f * m);
}

// Linear step: 0 below e0, 1 above e1, linear in between
float HFunction(float x, float e0, float e1)
{
    if(x <= e0) return 0.f;
    if(x >= e1) return 1.f;

    return (x - e0) / (e1 - e0);
}

// https://www.desmos.com/calculator/gslcdxvipg?lang=zh-CN
// https://www.shadertoy.com/view/Xstyzn
float GTHelper(half x)
{
    float P = _MaxLuminanice;       // max luminance [1, 100]
    float a = _Contrast;            // contrast [1, 5]
    float m = _LinearSectionStart;  // linear section start
    float l = _LinearSectionLength; // linear section length
    // Black tightness [1, 3] & [0, 1]
    float c = _BlackTightnessC;
    float b = _BlackTightnessB;

    // Linear region computation
    // l0 is the linear length after scaling
    float l0 = (P - m) * l / a;
    float L0 = m - m / a;
    float L1 = m + (1 - m) / a;
    float L_x = m + a * (x - m);

    // Toe
    float T_x = m * pow(x / m, c) + b;

    // Shoulder
    float S0 = m + l0;
    float S1 = m + a * l0;
    float C2 = a * P / (P - S1);
    float S_x = P - (P - S1) * exp(-C2 * (x - S0) / P);

    float w0_x = 1 - WFunction(x, 0, m);       // toe weight
    float w2_x = HFunction(x, m + l0, m + l0); // shoulder weight (a step at m + l0)
    float w1_x = 1 - w0_x - w2_x;              // linear weight

    return T_x * w0_x + L_x * w1_x + S_x * w2_x;
}

PSInput TonemappingVS(VSInput vsInput)
{
    PSInput vsOutput;
    vsOutput.positionH = TransformObjectToHClip(vsInput.positionL.xyz);
    vsOutput.uv = vsInput.uv;
    return vsOutput;
}

float4 TonemappingPS(PSInput psInput) : SV_TARGET
{
    float3 outputColor = 0.f;
    half4 mainTex = SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv);

    float r = GTHelper(mainTex.r);
    float g = GTHelper(mainTex.g);
    float b = GTHelper(mainTex.b);
    outputColor += float3(r, g, b);

    return float4(outputColor, mainTex.a);
}

////////////////////////////////
// Tone mapping end
////////////////////////////////
```

RenderFeature

using System;
using UnityEngine;
using UnityEngine.Rendering;
using UnityEngine.Rendering.Universal;

public class TonemappingRenderFeature : ScriptableRendererFeature
{
    // Settings shown on the render feature in the inspector
    [System.Serializable]
    public class PassSetting
    {
        [Tooltip("Label shown in the Frame Debugger")]
        public readonly string m_ProfilerTag = "Tonemapping Pass";

        [Tooltip("Where the pass is injected")]
        public RenderPassEvent m_passEvent = RenderPassEvent.AfterRenderingTransparents;

        [Tooltip("Maximum luminance [1, 100]")]
        [Range(1, 100)]
        public float m_MaxLuminanice = 1f;

        [Tooltip("Contrast")]
        [Range(1, 5)]
        public float m_Contrast = 1f;

        [Tooltip("Start of the linear section")]
        [Range(0, 1)]
        public float m_LinearSectionStart = 0.4f;

        [Tooltip("Length of the linear section")]
        [Range(0, 1)]
        public float m_LinearSectionLength = 0.24f;

        [Tooltip("Black Tightness C")]
        [Range(0, 3)]
        public float m_BlackTightnessC = 1.33f;

        [Tooltip("Black Tightness B")]
        [Range(0, 1)]
        public float m_BlackTightnessB = 0f;
    }

    class TonemappingRenderPass : ScriptableRenderPass
    {
        // Stores the pass settings
        private TonemappingRenderFeature.PassSetting m_passSetting;
        private RenderTargetIdentifier m_TargetBuffer, m_TempBuffer;
        private Material m_Material;

        static class ShaderIDs
        {
            // Integer IDs perform better than string names because the name lookup is done once, up front
            internal static readonly int m_MaxLuminaniceID = Shader.PropertyToID("_MaxLuminanice");
            internal static readonly int m_ContrastID = Shader.PropertyToID("_Contrast");
            internal static readonly int m_LinearSectionStartID = Shader.PropertyToID("_LinearSectionStart");
            internal static readonly int m_LinearSectionLengthID = Shader.PropertyToID("_LinearSectionLength");
            internal static readonly int m_BlackTightnessCID = Shader.PropertyToID("_BlackTightnessC");
            internal static readonly int m_BlackTightnessBID = Shader.PropertyToID("_BlackTightnessB");
            internal static readonly int m_TempBufferID = Shader.PropertyToID("_BufferRT1");
        }

        // Creates the material and sets its properties
        public TonemappingRenderPass(TonemappingRenderFeature.PassSetting passSetting)
        {
            this.m_passSetting = passSetting;
            renderPassEvent = m_passSetting.m_passEvent;

            if (m_Material == null) m_Material = CoreUtils.CreateEngineMaterial("Custom/PP_Tonemapping");

            // Set the material properties from the pass settings
            m_Material.SetFloat(ShaderIDs.m_MaxLuminaniceID, m_passSetting.m_MaxLuminanice);
            m_Material.SetFloat(ShaderIDs.m_ContrastID, m_passSetting.m_Contrast);
            m_Material.SetFloat(ShaderIDs.m_LinearSectionStartID, m_passSetting.m_LinearSectionStart);
            m_Material.SetFloat(ShaderIDs.m_LinearSectionLengthID, m_passSetting.m_LinearSectionLength);
            m_Material.SetFloat(ShaderIDs.m_BlackTightnessCID, m_passSetting.m_BlackTightnessC);
            m_Material.SetFloat(ShaderIDs.m_BlackTightnessBID, m_passSetting.m_BlackTightnessB);
        }

        // Gets called by the renderer before executing the pass.
        // Can be used to configure render targets and their clearing state.
        // Can be used to create temporary render target textures.
        // If this method is not overridden, the render pass will render to the active camera render target.
        public override void OnCameraSetup(CommandBuffer cmd, ref RenderingData renderingData)
        {
            // camera target descriptor will be used when creating a temporary render texture
            RenderTextureDescriptor descriptor = renderingData.cameraData.cameraTargetDescriptor;
            // Set the number of depth bits we need for temporary render texture
            descriptor.depthBufferBits = 0;

            // Enable these if pass requires access to the CameraDepthTexture or the CameraNormalsTexture.
            // ConfigureInput(ScriptableRenderPassInput.Depth);
            // ConfigureInput(ScriptableRenderPassInput.Normal);

            // Grab the color buffer from the renderer camera color target
            m_TargetBuffer = renderingData.cameraData.renderer.cameraColorTarget;

            // Create a temporary render texture using the descriptor from above
            cmd.GetTemporaryRT(ShaderIDs.m_TempBufferID, descriptor, FilterMode.Bilinear);
            m_TempBuffer = new RenderTargetIdentifier(ShaderIDs.m_TempBufferID);
        }

        // The actual execution of the pass. This is where custom rendering occurs
        public override void Execute(ScriptableRenderContext context, ref RenderingData renderingData)
        {
            // Grab a command buffer. We put the actual execution of the pass inside of a profiling scope
            CommandBuffer cmd = CommandBufferPool.Get();

            using (new ProfilingScope(cmd, new ProfilingSampler(m_passSetting.m_ProfilerTag)))
            {
                // Blit from the color buffer to a temporary buffer (applying the tonemapping material) and back
                Blit(cmd, m_TargetBuffer, m_TempBuffer, m_Material, 0);
                Blit(cmd, m_TempBuffer, m_TargetBuffer);
            }

            // Execute the command buffer and release it
            context.ExecuteCommandBuffer(cmd);
            CommandBufferPool.Release(cmd);
        }

        // Called when the camera has finished rendering
        // release/cleanup any allocated resources that were created by this pass
        public override void OnCameraCleanup(CommandBuffer cmd)
        {
            if (cmd == null) throw new ArgumentNullException("cmd");

            // Since we created a temporary render texture in OnCameraSetup, release it here to avoid a leak
            cmd.ReleaseTemporaryRT(ShaderIDs.m_TempBufferID);
        }
    }

    public PassSetting m_Setting = new PassSetting();
    TonemappingRenderPass m_TonemappingPass;

    // Initialization
    public override void Create()
    {
        m_TonemappingPass = new TonemappingRenderPass(m_Setting);
    }

    // Here you can inject one or multiple render passes in the renderer.
    // This method is called when setting up the renderer once per-camera.
    public override void AddRenderPasses(ScriptableRenderer renderer, ref RenderingData renderingData)
    {
        // can queue up multiple passes after each other
        renderer.EnqueuePass(m_TonemappingPass);
    }
}
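To experiment with the six curve parameters outside Unity (for example, to reproduce the curve plotted in the Desmos link among the references), you can evaluate the curve on the CPU. The Python sketch below assumes that GTHelper, whose definition begins before this excerpt, follows the Uchimura formulation from the referenced Gran Turismo talk and Shadertoy. It is not the author's code. The names GTParams, gt_tonemap and gt_tonemap_rgb are hypothetical, the defaults are copied from PassSetting, and unlike the shader it clamps negative inputs to 0.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class GTParams:
    """Gran Turismo (Uchimura) curve parameters.

    Assumption: defaults copied from the PassSetting defaults of the render feature.
    """
    P: float = 1.0    # max luminance          (_MaxLuminanice)
    a: float = 1.0    # contrast               (_Contrast)
    m: float = 0.4    # linear section start   (_LinearSectionStart)
    l: float = 0.24   # linear section length  (_LinearSectionLength)
    c: float = 1.33   # black tightness C      (_BlackTightnessC)
    b: float = 0.0    # black tightness B      (_BlackTightnessB)


def smoothstep(e0: float, e1: float, x: float) -> float:
    """Same as HLSL smoothstep: Hermite interpolation of x clamped to [e0, e1]."""
    t = min(max((x - e0) / (e1 - e0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def step(edge: float, x: float) -> float:
    """Same as HLSL step: 1 if x >= edge, else 0."""
    return 1.0 if x >= edge else 0.0


def gt_tonemap(x: float, p: GTParams = GTParams()) -> float:
    """Map one linear channel value through the GT curve (hypothetical helper)."""
    x = max(x, 0.0)  # assumption: clamp negatives (pow of a negative is NaN in HLSL)
    l0 = (p.P - p.m) * p.l / p.a
    s0 = p.m + l0              # where the shoulder starts
    s1 = p.m + p.a * l0        # curve value at s0
    c2 = p.a * p.P / (p.P - s1)
    cp = -c2 / p.P

    # Blend weights for the toe (w0), linear section (w1) and shoulder (w2)
    w0 = 1.0 - smoothstep(0.0, p.m, x)
    w2 = step(s0, x)
    w1 = 1.0 - w0 - w2

    toe = p.m * (x / p.m) ** p.c + p.b
    linear = p.m + p.a * (x - p.m)
    shoulder = p.P - (p.P - s1) * math.exp(cp * (x - s0))
    return toe * w0 + linear * w1 + shoulder * w2


def gt_tonemap_rgb(rgb: Sequence[float], p: GTParams = GTParams()) -> Tuple[float, ...]:
    """Apply the curve per channel, as TonemappingPS does with GTHelper."""
    return tuple(gt_tonemap(ch, p) for ch in rgb)


if __name__ == "__main__":
    for x in (0.0, 0.2, 0.5, 1.0, 2.0, 8.0):
        print(f"GT({x:.2f}) = {gt_tonemap(x):.4f}")
```

With the defaults (a = 1), inputs between m = 0.4 and the shoulder start of about 0.544 pass through unchanged. Larger inputs approach P = 1 asymptotically, so raising m_MaxLuminanice raises the white point.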

Final Result

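This is not the final result yet: shadows, multiple light sources, and MMD have not been added. I will add them to the project later when time permits.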

Full code: https://github.com/chenglixue/Cartoon-Character-Rendering

References

https://zhuanlan.zhihu.com/p/360229590

https://zhuanlan.zhihu.com/p/547129280

https://ys.mihoyo.com/main/news/detail/25606

https://zhuanlan.zhihu.com/p/420473327

https://zhuanlan.zhihu.com/p/513484597

https://zhuanlan.zhihu.com/p/551629982

https://zhuanlan.zhihu.com/p/389971233

https://www.bilibili.com/read/cv13564810/

http://cdn2.gran-turismo.com/data/www/pdi_publications/PracticalHDRandWCGinGTS_20181222.pdf

https://www.shadertoy.com/view/Xstyzn

https://www.desmos.com/calculator/gslcdxvipg?lang=zh-CN

https://www.youtube.com/watch?v=D9ocVzGJfI8

https://zhuanlan.zhihu.com/p/365339160